K-Nearest Neighbors (Second Classifier)

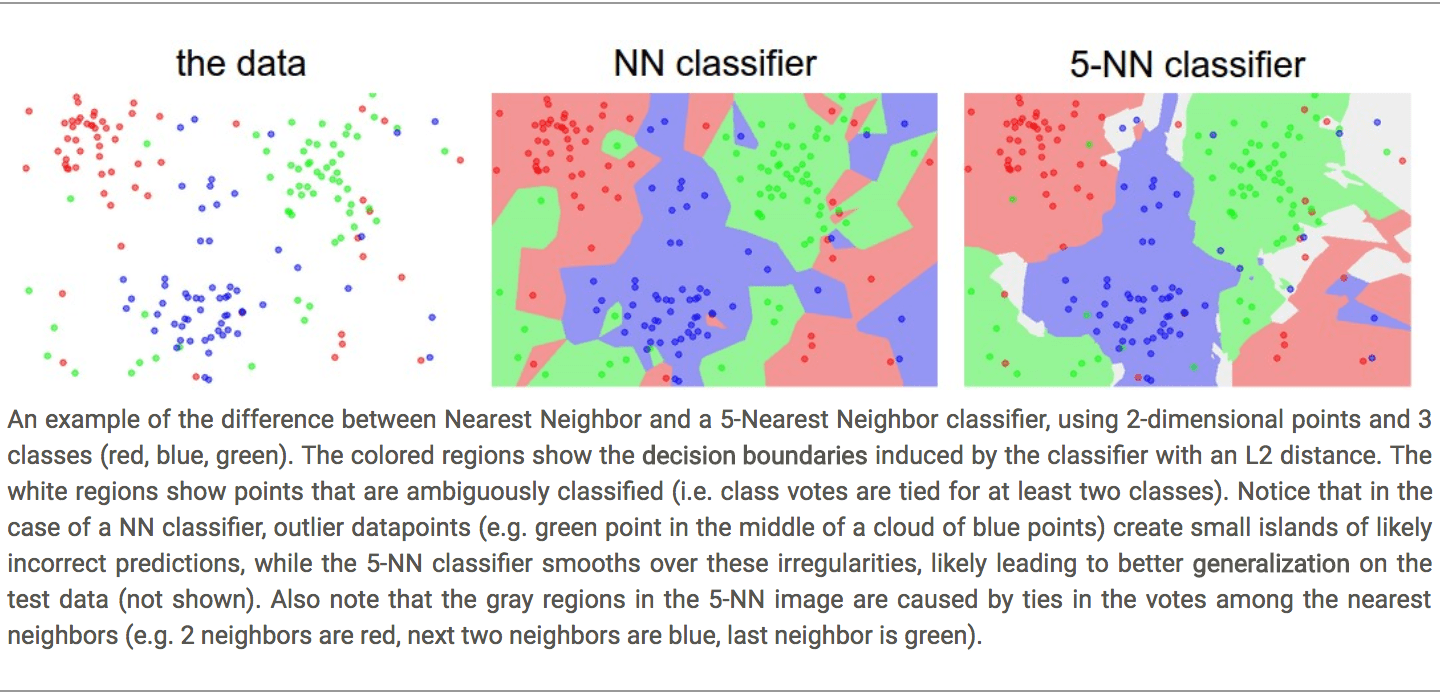

K-Nearest Neighbors is a classifier like nearest neighbors, but instead of calculating the single nearest neighbor, the algorithm takes a majority vote based on multiple (K) nearest neighbors .This smooths out your decision boundaries and leads to better results.

Q/A:

- Q: In the above images, what are the white regions?

- A: White regions are where there was no majority vote between the K-nearest neighbors

Hyperparameter Tuning

The k-nearest neighbor classifier requires a number for k . But what number works best? Additionally, we saw that there are many different distance functions we could have used: L1 norm, L2 norm, there are many other choices we didn’t even consider (e.g. dot products). These choices are called hyperparameters and they come up very often in the design of many Machine Learning algorithms that learn from data. It’s often not obvious what values/settings one should choose.

Some hyperparameters that must be tuned for the k-nearest neighbor classifer include:

The number k

The distance metric used

These hyperparameters are tested and evaluated through a process called cross-validation.

Distance Metrics

Different distance metrics make different assumptions about the geometry or topology you’d expect in the space. Distance metrics:

L1 (Manhattan) Distance

- Depends on your choice of coordinate system

- If you were to rotate the coordinate frame, that would actually change the L1 distance between the points

- If your input features, individual vectors have some important meaning for your task, L1 might be a more natural fit

L2 (Euclidean) distance

The L2 Distance has the geometric interpretation of computing the euclidean distance between two vectors. The distance takes the form:

- If you were to rotate the coordinate frame, it would not affect the L2 distance between points

By using different distance metrics, we can generalize the K-Nearest neighbors classifier to many different types of data - not just vectors or images. For example if you wanted to classify pieces of text - you would have to specify a distance function that measures distances between two paragraphs or two sentences (something like that). By specifying different distance metrics, we can apply this algorithm pretty generally to basically any type of data.

Q/A:

- Q: What is actually happening geometrically when you choose different distance metrics?

- A:

- With L1, decision boundaries tend to follow the coordinate axes, because L1 depends on choice of coordinate system.

- L2 doesn’t care about choice of coordinate system, it just puts the boundaries where it thinks they should fall naturally

- Q: Where would L1 distance be preferable to using L2 distance?

- A: Problem dependent. Since L1 is dependent on coordinates of your data, L1 would be good if you know you have a vector, and the individual elements of that vector have meaning

- Classifying employees and diff elements of that vector correspond to diff features/aspects of an employee (Salary, Years worked).

- This is a hyperparameter - so the best answer is to try both L1 and L2 and see which works betters.

- A: Problem dependent. Since L1 is dependent on coordinates of your data, L1 would be good if you know you have a vector, and the individual elements of that vector have meaning